teleparser

Sometimes you need something open... This post briefly introduces the teleparser script, whose goal is to parse the Telegram cache4.db database. Honestly speaking, I would have preferred to work on something else, but the coding (or better, decoding) job was born from a real case a few months ago.

Suppose you have a truly important cache4.db, a file containing every non-deleted and synced chat of the suspect, together with his encrypted p2p chats. Suppose that all the major well-known commercial solutions are unable to properly parse that database or, when they are able to, they provide slightly different results. Again, the database content is crucial evidence. Which tool's output, if any, would you rely on to report the evidence?

That's a classic example where the digital investigator must be able to explain every single bit (theoretically, he always should be). The point is that cache4.db is not just a simple SQLite database: it's a SQLite database containing a lot of binary blobs. Guess what? All the juicy information resides there!

I'm not talking about something unknown: probably the best paper written about Telegram cache4.db parsing is "Forensic analysis of Telegram Messenger on Android smartphones" by Cosimo Anglano, Massimo Canonico and Marco Guazzone (Digital Investigation 23: 31-49, 2017). I strongly suggest reading the paper: it's very well written and provides tons of information. Moreover, if you want to understand the teleparser code, reading it is mandatory.

Prof. Anglano is a very well-known academic, and I know him personally, but the quality of the paper has nothing to do with that: it's simply well written, check it yourself. The only issue is that they did not release any code... unfortunately, let me say... but after dealing with the parsing myself I figured out a couple of reasons why: it's quite a huge job (I remember being on the phone with Cosimo: "ok, I'll write it then"... silence is golden...).

In the end I took the case and slowly started to dig into the Telegram code: thankfully, it's not obfuscated.

Blobs, blobs everywhere!

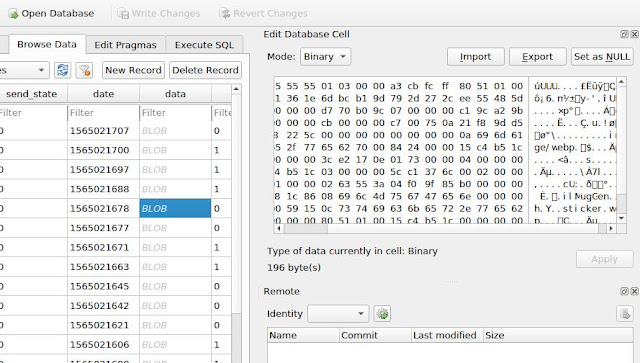

Basically every table inside cache4.db has one or more columns containing blobs: the picture partially shows a message from the messages table. The table itself contains useful fields, but the most important content is stored in the blobs of the data column.

In the picture it's possible to see some readable ASCII (UTF-8) strings, but don't be fooled: strings alone will not get you very far. So what are those blobs?

As figured out in Cosimo's paper, Telegram developers like serialization a lot (oh well, everyone does!). Basically everything is serialized with a specific structure: even true and false have their own.
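Those readable strings are not free text floating in the blob: in the TL serialization used by Telegram (MTProto), strings and byte arrays are length-prefixed and padded to a 4-byte boundary. The following is a minimal Python sketch, assuming that layout (a 1-byte length, or 0xfe followed by a 3-byte little-endian length for longer values); read_tl_bytes and read_tl_string are hypothetical names, not teleparser's code.

```python
from typing import Tuple


def read_tl_bytes(buf: bytes, offset: int) -> Tuple[bytes, int]:
    """Read a TL length-prefixed byte string at `offset`.

    Returns (payload, new_offset), where new_offset already skips the padding.
    Assumed layout: 1-byte length (< 254), or 0xfe + 3-byte little-endian
    length, then the payload, then zero padding to a multiple of 4 bytes.
    """
    first = buf[offset]
    if first >= 254:
        length = int.from_bytes(buf[offset + 1:offset + 4], "little")
        header = 4
    else:
        length = first
        header = 1
    start = offset + header
    end = start + length
    if end > len(buf):
        raise ValueError(f"string at offset {offset} overruns the blob")
    # Header + payload is rounded up to the next multiple of 4 bytes.
    padded = (header + length + 3) // 4 * 4
    return buf[start:end], offset + padded


def read_tl_string(buf: bytes, offset: int) -> Tuple[str, int]:
    """Same as read_tl_bytes, decoding the payload as UTF-8."""
    raw, new_offset = read_tl_bytes(buf, offset)
    return raw.decode("utf-8"), new_offset


print(read_tl_string(b"\x05hello\x00\x00", 0))  # ('hello', 8)
```

The returned offset matters more than the string itself: without it you cannot know where the next field starts, which is exactly why grepping for strings does not get you very far.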

Partially visible in the previous picture are the first 4 bytes (32 bits): 0xfa 0x55 0x55 0x55, which read little-endian give 0x555555fa, or 1431655930 in decimal. This integer represents the object type, in this case a TL_message_secret: that's what we have in front of us.
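Reading that first integer takes a single call to Python's struct module. A minimal sketch, where the lookup table contains only the ID quoted above (a real parser would need the full list extracted from the Telegram sources); KNOWN_CONSTRUCTORS and read_constructor are hypothetical names.

```python
import struct

# Only the ID quoted in this post; the full table lives in the Telegram sources.
KNOWN_CONSTRUCTORS = {
    0x555555FA: "TL_message_secret",
}


def read_constructor(blob: bytes, offset: int = 0):
    """Return (constructor_id, name) for the little-endian uint32 at `offset`."""
    (cid,) = struct.unpack_from("<I", blob, offset)
    return cid, KNOWN_CONSTRUCTORS.get(cid, "unknown")


cid, name = read_constructor(bytes([0xFA, 0x55, 0x55, 0x55]))
print(hex(cid), cid, name)  # 0x555555fa 1431655930 TL_message_secret
```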

Jumping into the Telegram code (I'll never be able to thank Skylot enough for his immense jadx), it's possible to get the source of the serialization and deserialization routines for that specific object.

Honestly, it's well organized. As shown in the picture, there are nested objects, for example an array of entities. Basically, the Telegram code composes the objects dynamically, depending on their content, flags, and so on. That said, since even True/False have their own objects (0x997275b5 and 0xbc799737, respectively), it's not hard to understand that those blobs will vary quite often... How much? Telegram version 5.5.0 has no fewer than 1,340 objects, and the Java code related to them counts around 42,000 lines... good luck... but there is more.
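To give an idea of how such nested, flag-driven objects are read, here is a minimal sketch. The Bool IDs are the ones quoted above; the vector constructor 0x1cb5c415 is an assumption based on the standard TL serialization, and the entity layout (flags, offset, length, optional Bool) is entirely hypothetical, only meant to show how a single flag bit decides whether a field is present at all.

```python
import struct

BOOL_TRUE = 0x997275B5   # Bool true, as quoted in the post
BOOL_FALSE = 0xBC799737  # Bool false, as quoted in the post
VECTOR = 0x1CB5C415      # assumption: standard TL vector constructor


class Reader:
    """Sequential little-endian reader over a single blob."""

    def __init__(self, data: bytes):
        self.data = data
        self.pos = 0

    def read_uint32(self) -> int:
        (value,) = struct.unpack_from("<I", self.data, self.pos)
        self.pos += 4
        return value

    def read_bool(self) -> bool:
        # Even a boolean is an object: it is identified by its constructor ID.
        cid = self.read_uint32()
        if cid == BOOL_TRUE:
            return True
        if cid == BOOL_FALSE:
            return False
        raise ValueError(f"expected Bool, got {cid:#010x}")

    def read_vector(self, read_item):
        # Vector = constructor ID, element count, then the elements themselves.
        cid = self.read_uint32()
        if cid != VECTOR:
            raise ValueError(f"expected vector, got {cid:#010x}")
        count = self.read_uint32()
        return [read_item(self) for _ in range(count)]


def read_hypothetical_entity(r: Reader) -> dict:
    # HYPOTHETICAL layout: flags, offset, length, then a Bool only if bit 0 is set.
    flags = r.read_uint32()
    entity = {"offset": r.read_uint32()}
    entity["length"] = r.read_uint32()
    if flags & 1:
        entity["pinned"] = r.read_bool()
    return entity


blob = struct.pack("<9I", VECTOR, 2, 1, 0, 5, BOOL_TRUE, 0, 6, 3)
print(Reader(blob).read_vector(read_hypothetical_entity))
# [{'offset': 0, 'length': 5, 'pinned': True}, {'offset': 6, 'length': 3}]
```

Note how the two entities have different sizes (16 and 12 bytes) only because of one flag bit: multiply that by 1,340 objects and the variability of the blobs becomes obvious.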

Like every other application, Telegram adds new features, which often means new objects with new IDs. To guarantee backward compatibility, that is, to allow different versions of the Telegram application to exchange messages, the code must be able to handle multiple instances of the same object (e.g., messages): in version 5.6.2 there are ~24 different message instances (this, by the way, is where the otherwise well-written Telegram code becomes a bit dirty, since it uses many switch statements... and it drove me mad at first...).
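In Python, a common way to tame those switch statements is a dispatch table that maps several constructor IDs to the same parser. A minimal sketch: 0x555555fa is the TL_message_secret ID quoted above, while 0x11111111 and 0x22222222 are hypothetical placeholders for other message layouts, and the parser bodies are stubs (register, PARSERS and parse_message are hypothetical names too).

```python
import struct
from typing import Callable, Dict

# Maps constructor ID -> parser(blob, offset_after_id) -> decoded dict.
PARSERS: Dict[int, Callable[[bytes, int], dict]] = {}


def register(*cids: int):
    """Decorator: bind one parser function to one or more constructor IDs."""
    def wrap(fn):
        for cid in cids:
            PARSERS[cid] = fn
        return fn
    return wrap


@register(0x555555FA)  # TL_message_secret, as quoted in the post
def parse_message_secret(blob: bytes, offset: int) -> dict:
    return {"type": "TL_message_secret", "body_offset": offset}  # stub


@register(0x11111111, 0x22222222)  # HYPOTHETICAL IDs for other message layouts
def parse_message_other(blob: bytes, offset: int) -> dict:
    return {"type": "legacy message", "body_offset": offset}  # stub


def parse_message(blob: bytes) -> dict:
    (cid,) = struct.unpack_from("<I", blob, 0)
    try:
        parser = PARSERS[cid]
    except KeyError:
        raise ValueError(f"unsupported message constructor {cid:#010x}") from None
    return parser(blob, 4)
```

Adding support for a new message instance then means registering one more ID, instead of touching every switch that handles messages.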

Finally, they change and remove features too, so they remove objects and/or change them (and their IDs). Can you picture the mess when you have to handle all the possible versions? Objects going, coming, changing... It's easy to see why all the well-known commercial tools failed to parse that specific version some months ago: now they do a proper job (not all of them...), but we still don't know how they work...

Ok, I'll quit the bla bla bla: if you're here, it means you have read everything (or just jumped to this word: the code, man...). [I've started to think that it's better to provide few details in this kind of blog post, because the code exists: anyone truly interested in understanding those blobs will use the code... and don't forget the paper].

teleparser

To be able to provide a (sort of) knowledgeable answer in my real case, I wrote a Python script called teleparser. The tool parses the cache4.db database and creates a txt file for each table it parses (not every table, my bias, sorry).

The goal is to extract every single piece of information from the tables and their blobs, and to represent it in a semi-human-readable way. Basically, each of those text files (one per table) prints out all the deserialized blobs with minimal context. It's not the best output ever, I admit, but the scenario is having the usual commercial tools available and being able to validate their output... and possibly to find what they missed or what is hard to render on screen (this is not as easy a task as it may seem).
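A minimal sketch of the one-text-file-per-table idea, using Python's standard sqlite3 module. It assumes the table has a data blob column (as the messages table does) and an implicit rowid; dump_table, write_report and the decode callback are hypothetical names, not teleparser's actual code.

```python
import sqlite3
from typing import Callable, List


def dump_table(conn: sqlite3.Connection, table: str,
               decode: Callable[[bytes], str]) -> List[str]:
    """Return report lines for every row of `table`, one decoded blob per row."""
    lines = []
    # The table name is interpolated into SQL: only pass known, trusted names.
    query = f'SELECT rowid, data FROM "{table}" ORDER BY rowid'
    for rowid, blob in conn.execute(query):
        if blob is None:
            lines.append(f"--- {table} rowid={rowid} (no data)")
            continue
        # Minimal context: table, row and blob size, then the decoded content.
        lines.append(f"--- {table} rowid={rowid} ({len(blob)} bytes)")
        lines.append(decode(blob))
    return lines


def write_report(conn: sqlite3.Connection, table: str,
                 decode: Callable[[bytes], str], out_path: str) -> None:
    """Write the report for `table` to its own text file."""
    with open(out_path, "w", encoding="utf-8") as fh:
        fh.write("\n".join(dump_table(conn, table, decode)) + "\n")
```

Passing `lambda b: b.hex()` as decode gives a raw hex dump; a real decoder would plug in the TL deserialization. On evidence, it's safer to open a working copy read-only, e.g. `sqlite3.connect("file:cache4.db?mode=ro", uri=True)`.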

Unfortunately my friend Mattia was quite unhappy (to say the least) with that output... how could I say no to a friend (I very well could... but he knows how to challenge me...)? So I added a bit of logic to create a csv file with most of the data, without claiming to be thorough... Still, it works quite well, even if it's not the goal of the script.
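A csv export of "most of the data" can lean on csv.DictWriter from the standard library: with extrasaction="ignore", keys that don't fit the chosen columns are dropped and missing ones are left empty, so heterogeneous decoded objects still produce one row each. A minimal sketch; write_csv and the column names are hypothetical, not teleparser's actual output.

```python
import csv
import io
from typing import Iterable, List, Mapping, TextIO


def write_csv(records: Iterable[Mapping], fieldnames: List[str], fh: TextIO) -> None:
    """Write decoded records as csv rows, keeping only the chosen columns."""
    # extrasaction="ignore": unknown keys are dropped; missing keys become "".
    writer = csv.DictWriter(fh, fieldnames=fieldnames, extrasaction="ignore")
    writer.writeheader()
    for rec in records:
        writer.writerow(rec)


buf = io.StringIO()
write_csv(
    [{"table": "messages", "rowid": 1, "type": "TL_message_secret", "extra": "x"}],
    ["table", "rowid", "type", "text"],  # HYPOTHETICAL column names
    buf,
)
print(buf.getvalue())
```

When writing to a real file, open it with `newline=""` as the csv module documentation recommends.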

Just to stress the concept... I don't want to miss data or misinterpret it: the script should crash (as only a Python script can) if what it's parsing is not what is expected. That's the main goal. So please expect it to crash if the version is not (yet) supported... and then please go on GitHub and share whatever you can to get the script updated.
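That fail-fast behavior can be made explicit in code: refuse unknown constructor IDs and refuse blobs that are not fully consumed, instead of silently skipping bytes. A minimal sketch, assuming each parser returns the decoded object and the offset where it stopped; ParseError and parse_strict are hypothetical names.

```python
import struct
from typing import Any, Callable, Dict, Tuple

# A parser gets (blob, offset) and returns (decoded_object, new_offset).
Parser = Callable[[bytes, int], Tuple[Any, int]]


class ParseError(Exception):
    """Raised whenever a blob does not match the expected layout."""


def parse_strict(blob: bytes, parsers: Dict[int, Parser]) -> Any:
    if len(blob) < 4:
        raise ParseError("blob is shorter than a constructor ID")
    (cid,) = struct.unpack_from("<I", blob, 0)
    if cid not in parsers:
        # Unknown ID: probably an unsupported Telegram version. Stop here.
        raise ParseError(f"unknown constructor {cid:#010x}")
    obj, end = parsers[cid](blob, 4)
    if end != len(blob):
        # Leftover bytes mean the layout was misunderstood: do not guess.
        raise ParseError(f"{len(blob) - end} unparsed bytes after {cid:#010x}")
    return obj
```

For example, with parsers for the two Bool IDs quoted earlier, a 4-byte Bool blob parses fine, while the same blob followed by four extra bytes raises ParseError instead of returning a plausible but wrong result.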

The code is hosted at https://github.com/RealityNet/teleparser with a few instructions.

conclusion

In the end it took some hours to create the script, but I got everything from the cache4 database in front of me. Open source is not just a way to avoid paying for a commercial tool (it's quite impossible to avoid having them), but a way to understand and explain your evidence in a truly verifiable and objective way. A DFIR expert cannot rely on sentences like 'it was the tool', at least not for critical evidence.
